101 Concepts for the Level I Exam

Essential Concept 10: Data Prep & Wrangling


Structured data

For structured data, data preparation and wrangling involve data cleansing and data preprocessing.

Data cleansing involves resolving:

- Incompleteness errors: Data is missing.

- Invalidity errors: Data is outside a meaningful range.

- Inaccuracy errors: Data is not a measure of true value.

- Inconsistency errors: Data conflicts with the corresponding data points or reality.

- Non-uniformity errors: Data is not present in an identical format.

- Duplication errors: Duplicate observations are present.
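The following sketch shows how four of these error types might be resolved in plain Python. It is a minimal illustration under assumed conditions: the records, the field names (`id`, `name`, `age`, `joined`), the valid age range of 0 to 120, median imputation, and the two accepted date formats are all hypothetical choices, not a prescribed method. Inaccuracy and inconsistency errors are not covered, because detecting them usually requires an external source of truth.

```python
from datetime import datetime
from statistics import median

# Hypothetical raw records (field names and values are illustrative only).
raw = [
    {"id": 1, "name": "Ann", "age": 34, "joined": "2021-03-15"},
    {"id": 2, "name": "Bo", "age": None, "joined": "15/04/2021"},  # missing age
    {"id": 3, "name": "Cy", "age": 250, "joined": "2021-05-01"},   # invalid age
    {"id": 1, "name": "Ann", "age": 34, "joined": "2021-03-15"},   # duplicate
    {"id": 4, "name": "Di", "age": 40, "joined": "2021-06-30"},
]

def to_iso_date(text):
    # Accept two assumed input formats and emit one uniform format (YYYY-MM-DD).
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(text, fmt).strftime("%Y-%m-%d")
        except ValueError:
            pass
    return None  # unrecognized format: left for manual review

def cleanse(records, valid_age=(0, 120)):
    # Duplication errors: keep only the first copy of each identical record.
    seen, clean = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            clean.append(dict(rec))
    # Invalidity errors: an age outside the meaningful range is treated as missing.
    lo, hi = valid_age
    for rec in clean:
        if rec["age"] is not None and not lo <= rec["age"] <= hi:
            rec["age"] = None
    # Incompleteness errors: impute missing ages with the median of the valid ones.
    fill = median(r["age"] for r in clean if r["age"] is not None)
    for rec in clean:
        if rec["age"] is None:
            rec["age"] = fill
        # Non-uniformity errors: convert every date to the same format.
        rec["joined"] = to_iso_date(rec["joined"])
    return clean

for rec in cleanse(raw):
    print(rec)
```

Treating an invalid value as missing and then imputing it is only one option; dropping the observation is another, and the right choice depends on the project.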

Data preprocessing involves performing the following transformations:

- Extraction: A new variable is extracted from an existing variable to make the data easier to analyze and to use in training the ML model.

- Aggregation: Two or more variables are consolidated into a single variable.

- Filtration: Data rows not required for the project are removed.

- Selection: Data columns not required for the project are removed.

- Conversion: The variables in the dataset are converted into appropriate types to further process and analyze them correctly.
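A minimal sketch of the five transformations applied to a few hypothetical rows is shown below. The column names, the `keep_state` filter, and the fixed `AS_OF` date used to extract age from a birth date are illustrative assumptions.

```python
from datetime import date

# Hypothetical rows read as text; column names are illustrative assumptions.
rows = [
    {"id": "1", "birth_date": "1985-06-30", "salary": "80000", "bonus": "5000", "state": "NY", "notes": "x"},
    {"id": "2", "birth_date": "1990-01-15", "salary": "65000", "bonus": "2000", "state": "CA", "notes": "y"},
    {"id": "3", "birth_date": "1975-11-02", "salary": "90000", "bonus": "0", "state": "NY", "notes": "z"},
]
AS_OF = date(2024, 1, 1)  # assumed reference date for computing age

def preprocess(rows, keep_state="NY"):
    out = []
    for row in rows:
        # Filtration: drop rows (observations) not needed for the project.
        if row["state"] != keep_state:
            continue
        # Conversion: cast text fields to the types needed for analysis.
        born = date.fromisoformat(row["birth_date"])
        salary, bonus = float(row["salary"]), float(row["bonus"])
        # Extraction: derive a new variable (age in whole years) from an existing one.
        age = AS_OF.year - born.year - ((AS_OF.month, AS_OF.day) < (born.month, born.day))
        # Aggregation: consolidate two variables into a single variable.
        total_comp = salary + bonus
        # Selection: keep only the columns needed ("notes", "state", etc. are dropped).
        out.append({"id": int(row["id"]), "age": age, "total_comp": total_comp})
    return out

print(preprocess(rows))
# [{'id': 1, 'age': 38, 'total_comp': 85000.0}, {'id': 3, 'age': 48, 'total_comp': 90000.0}]
```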

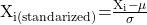

Scaling is the process of adjusting the range of a feature by shifting and changing the scale of data. Two common methods used for scaling are:

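- Normalization: Rescales the values of a feature into the range 0 to 1: $X_{i,\text{normalized}} = \dfrac{X_i - X_{\min}}{X_{\max} - X_{\min}}$

- Standardization: Centers and rescales the values of a feature so that they have a mean of 0 and a standard deviation of 1: $X_{i,\text{standardized}} = \dfrac{X_i - \mu}{\sigma}$, where $\mu$ is the mean and $\sigma$ is the standard deviation of the feature.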
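The two scaling formulas can be sketched as follows. This version assumes the population standard deviation (`statistics.pstdev`); using the sample standard deviation instead would change the standardized values slightly. Both functions reject a feature whose values are all equal, since the denominator would then be zero.

```python
from statistics import mean, pstdev

def normalize(xs):
    """Min-max normalization: (x - min) / (max - min), giving values in [0, 1]."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        raise ValueError("normalization is undefined when all values are equal")
    return [(x - lo) / (hi - lo) for x in xs]

def standardize(xs):
    """Standardization: (x - mean) / std, giving mean 0 and standard deviation 1."""
    mu, sigma = mean(xs), pstdev(xs)  # population standard deviation (assumption)
    if sigma == 0:
        raise ValueError("standardization is undefined when all values are equal")
    return [(x - mu) / sigma for x in xs]

print(normalize([10, 20, 30, 50]))             # [0.0, 0.25, 0.5, 1.0]
print(standardize([2, 4, 4, 4, 5, 5, 7, 9]))   # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```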

Unstructured data

For unstructured data, data preparation and wrangling involve a set of text-specific cleansing and preprocessing tasks.

Text cleansing involves removing the following unnecessary elements from the raw text:

- HTML tags

- Most punctuation

- Most numbers

- Extra white space
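A regex-based sketch of these cleansing steps is shown below. For simplicity it removes all punctuation and all digits, whereas in practice some (for example a percent sign or a meaningful number) might be kept or replaced with a marker; the patterns are assumptions, not a standard.

```python
import re

def cleanse_text(raw: str) -> str:
    # Remove HTML tags such as <p> or <br/> (replaced by a space to keep words apart).
    text = re.sub(r"<[^>]+>", " ", raw)
    # Remove punctuation and digits (simplification: removes all of them).
    text = re.sub(r"[^A-Za-z\s]", " ", text)
    # Collapse runs of white space into a single space and trim the ends.
    return re.sub(r"\s+", " ", text).strip()

print(cleanse_text("<p>Sales rose 12% this year!</p><br/>Margins held."))
# Sales rose this year Margins held
```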

Text preprocessing involves performing the following transformations:

- Tokenization: Splitting a given text into separate tokens, where each token is equivalent to a word.

- Normalization: The normalization process involves the following actions:

  - Lowercasing – Removes differences among the same words that arise only from upper and lower case.

  - Removing stop words – Stop words are commonly used words such as ‘the’, ‘is’ and ‘a’, which carry little meaning for the analysis and are removed.

  - Stemming – Converting inflected forms of a word into its base word (stem).

  - Lemmatization – Converting inflected forms of a word into its morphological root (known as a lemma). Lemmatization is more sophisticated than stemming but is more difficult and expensive to perform.

- Creating a bag-of-words (BOW): A BOW is the distinct set of tokens collected from the texts; it does not capture the position or sequence of the words in the text.

- Organizing the BOW into a document term matrix (DTM): A DTM is a table in which each row belongs to a document (or text file) and each column represents a token (or term). The number of rows equals the number of documents in the sample dataset, and the number of columns equals the number of tokens in the final BOW. Each cell contains the number of times a token appears in that document.

- N-grams and N-grams BOW: In some cases, a sequence of words may convey more meaning than the individual words. N-grams are a representation of word sequences, where the length of a sequence varies from 1 to n. A one-word sequence is a unigram, a two-word sequence is a bigram, a three-word sequence is a trigram, and so on.
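The preprocessing steps above can be strung together in a short pipeline. This is a sketch under several assumptions: the input text is already cleansed, the stop-word list is a small hand-picked set, and `toy_stem` is a hypothetical, crude suffix-stripping function written for illustration (it is not the Porter stemmer or any library's stemmer). Lemmatization is not shown because it needs a dictionary of word forms.

```python
from collections import Counter

# Small, hand-picked stop-word list (an assumption; real lists are longer).
STOP_WORDS = {"the", "is", "a", "an", "and", "of", "to", "in"}

def tokenize(text):
    # Tokenization: text is assumed to be cleansed already,
    # so splitting on white space yields one token per word.
    return text.split()

def toy_stem(token):
    # Hypothetical crude suffix stripping, for illustration only (not Porter).
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def normalize_tokens(tokens):
    tokens = [t.lower() for t in tokens]                 # lowercasing
    tokens = [t for t in tokens if t not in STOP_WORDS]  # stop-word removal
    return [toy_stem(t) for t in tokens]                 # stemming

def ngrams(tokens, n):
    # Join each run of n consecutive tokens with "_" so it acts as one token.
    return ["_".join(tokens[i : i + n]) for i in range(len(tokens) - n + 1)]

def build_dtm(docs):
    # BOW: the distinct set of tokens across all documents. Sorting only gives
    # the DTM a stable column order; the BOW itself carries no word order.
    bow = sorted(set().union(*docs))
    # DTM: one row per document, one column per BOW token, cells hold counts.
    counts = [Counter(doc) for doc in docs]
    dtm = [[c[token] for token in bow] for c in counts]
    return bow, dtm

docs_raw = ["The stock price is climbing", "Stock prices fell and the market is falling"]
docs = [normalize_tokens(tokenize(d)) for d in docs_raw]
bow, dtm = build_dtm(docs)
print(bow)                 # ['climb', 'fall', 'fell', 'market', 'price', 'stock']
print(dtm)                 # [[1, 0, 0, 0, 1, 1], [0, 1, 1, 1, 1, 1]]
print(ngrams(docs[0], 2))  # ['stock_price', 'price_climb']
```

In this example the toy stemmer maps `prices` to `price` and `falling` to `fall`, but leaves `fell` as a separate token; mapping an irregular form such as `fell` to its lemma `fall` is the kind of case lemmatization handles. Passing the bigram lists (`[ngrams(d, 2) for d in docs]`) to `build_dtm` would give an n-grams BOW and its DTM.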
